In the digital age, cybersecurity is a concern for everyone. From individuals to large corporations, understanding the threats we face is the first step towards protecting ourselves. In this blog post, we'll explore several key areas of cybersecurity: business logic attacks, online fraud, malware, and the evolution of firewall technology.

Business Logic Attacks: The Devil is in the Design

Business logic attacks target a distinct class of software vulnerabilities. Unlike implementation bugs, which can often be fixed with a targeted patch, these attacks exploit flaws in an application's core design. Such flaws can be anything from predictable usernames to weak password policies.

For instance, if a website uses a predictable pattern for user identifiers, such as firstname.lastname@company.com, an attacker can guess valid usernames and then run a dictionary attack against those accounts. Similarly, if a website's password recovery questions have easily researched answers (like the name of your high school, published on LinkedIn), an attacker can use that information to gain access to your account.

The best way to prevent these attacks is to address security in the design phase of software development. By incorporating security stories into the development process and engaging information security teams early on, developers can identify and address potential vulnerabilities before they become a problem.
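As one example of what addressing security at design time can look like for the predictable-identifier problem above, the login handler below gives the same generic message whether the username or the password was wrong, so an attacker can't confirm which guessed identifiers exist, and it locks an account after repeated failures. This is a minimal sketch: the in-memory user store, the `MAX_FAILURES` threshold, and the function names are hypothetical, and PBKDF2 from Python's standard library stands in for whatever password hash a real system would choose.

```python
import hashlib
import hmac
import os

MAX_FAILURES = 5                       # hypothetical lockout threshold
GENERIC_ERROR = "Invalid username or password."
ITERATIONS = 100_000                   # illustrative PBKDF2 work factor

def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

# Hypothetical in-memory user store: username -> (salt, password hash).
_salt = os.urandom(16)
USERS = {"jane.doe@company.com": (_salt, hash_password("correct horse", _salt))}
FAILURES: dict[str, int] = {}          # failed attempts per submitted username

# Unknown usernames are checked against a dummy hash so they cost the same work.
_DUMMY_SALT = os.urandom(16)
_DUMMY_HASH = hash_password("dummy", _DUMMY_SALT)

def login(username: str, password: str) -> tuple[bool, str]:
    if FAILURES.get(username, 0) >= MAX_FAILURES:
        return False, GENERIC_ERROR    # locked: same message as any other failure
    salt, stored = USERS.get(username, (_DUMMY_SALT, _DUMMY_HASH))
    password_ok = hmac.compare_digest(hash_password(password, salt), stored)
    if password_ok and username in USERS:
        FAILURES.pop(username, None)   # reset the counter on success
        return True, "Welcome."
    FAILURES[username] = FAILURES.get(username, 0) + 1
    return False, GENERIC_ERROR
```

Because unknown and known usernames produce identical responses, a list of guessed firstname.lastname identifiers gives the attacker no confirmation of which accounts are real.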

Online Fraud: The Ever-Evolving Threat

Online fraud is not a new threat, but it's one that's constantly evolving. With over 90 billion e-commerce transactions made in 2016 alone, the potential for fraud is enormous.

Attackers are now using machine learning and artificial intelligence to adapt their tactics and communicate with victims automatically. They're also using social engineering techniques, like phishing and spear phishing, to trick users into giving up sensitive information.

Malware: The Silent Threat

Malware is another major cybersecurity threat. From viruses and worms to ransomware, malware can cause significant damage to a system. One of the most concerning trends is malware that surgically alters data, changing specific values to entirely different ones rather than simply stealing or destroying it.

Imagine if an attacker could change a stoplight at a major intersection from red to green on demand, or disable your car's brakes while you're driving down the freeway. With the rise of the Internet of Things (IoT), these scenarios are becoming increasingly possible.

Evolution of Firewall Technology

To combat these threats, firewall technology has evolved significantly over the years. From traditional Intrusion Detection System (IDS) and Intrusion Prevention System (IPS) technology to Next-Generation Firewall (NGFW) technology, these systems are designed to protect our networks and systems from attacks.

However, these systems are not infallible. Attackers can use various techniques to evade detection, like packet fragmentation, encoding, and whitespace diversity.
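To see why fragmentation works against naive inspection, the sketch below compares a matcher that checks each fragment on its own with one that reassembles the fragments first. The signature and payload are hypothetical, and real IDS/IPS engines reassemble at the IP and TCP layers rather than over a Python list of byte strings.

```python
SIGNATURE = b"union select"  # hypothetical signature for a SQL injection attempt

def per_fragment_match(fragments: list[bytes]) -> bool:
    # Inspects each fragment in isolation, as a naive sensor might.
    return any(SIGNATURE in frag.lower() for frag in fragments)

def reassembled_match(fragments: list[bytes]) -> bool:
    # Reassembles the fragments in order before inspecting the whole payload.
    return SIGNATURE in b"".join(fragments).lower()

# The attacker splits the payload so no single fragment contains the signature.
fragments = [b"id=1 UNION SE", b"LECT password FROM users"]
print(per_fragment_match(fragments))  # False: the signature straddles two fragments
print(reassembled_match(fragments))   # True
```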

This is where Web Application Firewall (WAF) technology comes in. WAFs are designed to protect HTTP applications by analyzing transactions and preventing malicious traffic from reaching the application. They can detect and address application layer attacks, like SQL injection and Cross-Site Scripting (XSS), and provide URL, parameter, cookie, and form protection for applications.

Web Application Firewalls (WAFs) are a crucial part of any cybersecurity strategy. They serve as the first line of defence for applications, detecting and mitigating a wide range of threats. However, they are not foolproof and should be deployed alongside other complementary technologies as part of a robust defence-in-depth strategy. Let's dive into the world of WAFs and look at their capabilities, how they work, and the emerging trends in this space.

Core WAF Capabilities

WAFs are designed to detect and mitigate threats by analyzing the structure of requests rather than relying only on exact signature matches. This is achieved through heuristics and rulesets. These rulesets can be configured to consider information such as the request's country of origin, the length of individual parts of the request, potentially malicious SQL code, and suspicious strings that appear in requests.
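One way to picture heuristic rulesets is anomaly scoring: each rule inspects one property of the request and adds to a score, and the request is blocked once the score crosses a threshold. In the sketch below, the rule weights, the placeholder country code, the length limit, the patterns, and the threshold are all hypothetical values chosen for illustration, not any vendor's ruleset.

```python
import re
from dataclasses import dataclass, field

@dataclass
class Request:
    country: str                               # assumed to come from a GeoIP lookup
    params: dict[str, str] = field(default_factory=dict)

BLOCKED_COUNTRIES = {"XX"}                     # hypothetical placeholder code
MAX_PARAM_LENGTH = 256                         # hypothetical per-parameter limit
SQL_PATTERN = re.compile(
    r"(\bunion\b\s+\bselect\b|\bor\b\s+1\s*=\s*1|--|;\s*drop\b)", re.IGNORECASE
)
SUSPICIOUS_STRINGS = ("<script", "../", "/etc/passwd")
BLOCK_THRESHOLD = 5

def score(req: Request) -> int:
    total = 0
    if req.country in BLOCKED_COUNTRIES:
        total += 3                             # country of origin
    for value in req.params.values():
        if len(value) > MAX_PARAM_LENGTH:
            total += 2                         # unusually long parameter
        if SQL_PATTERN.search(value):
            total += 5                         # likely SQL injection
        if any(s in value.lower() for s in SUSPICIOUS_STRINGS):
            total += 4                         # known-bad strings
    return total

def should_block(req: Request) -> bool:
    return score(req) >= BLOCK_THRESHOLD
```

Scoring lets several weak signals (an unexpected country plus an oversized parameter) add up to a block, while any single weak signal on its own does not.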

WAFs and XSS Attacks

Cross-site scripting (XSS) attacks are a significant risk to businesses and consumers. Developers can prevent these attacks by validating user input and using output encoding. However, even with these best practices, vulnerabilities can still exist due to third-party libraries or software development processes that you don't control.
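Output encoding means converting characters with special meaning in HTML into entities before user-supplied text is placed on a page, so the browser renders them as text instead of executing them. Python's standard `html.escape` covers HTML body and quoted-attribute contexts; the `render_comment` template below is a hypothetical example, and other contexts (JavaScript, URLs, CSS) need their own encoders.

```python
import html

def render_comment(author: str, body: str) -> str:
    # html.escape converts &, <, >, " and ' so input can't break out of the HTML context.
    return f'<div class="comment"><b>{html.escape(author)}</b>: {html.escape(body)}</div>'

malicious = '<script>alert("xss")</script>'
print(render_comment("mallory", malicious))
# <div class="comment"><b>mallory</b>: &lt;script&gt;alert(&quot;xss&quot;)&lt;/script&gt;</div>
```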

An attacker first needs to find an XSS vulnerability before they can exploit it. They can use tools like web application vulnerability scanners and fuzzers to find these vulnerabilities automatically. Once a vulnerability is found, the attacker can inject malicious scripts into the web application.

WAFs and Session Attacks

Session tampering is a significant threat that can allow attackers to manipulate session data and potentially gain unauthorized access to a system. WAFs can help mitigate these attacks by digitally signing artefacts such as cookies and ensuring users are communicating only with servers that have valid digital certificates.
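Signing a cookie lets the server detect tampering: it appends a keyed hash (HMAC) of the value and rejects any cookie whose signature doesn't verify. The sketch below uses Python's standard `hmac` module; the `value.signature` cookie format and the way the key is generated are assumptions for illustration.

```python
import base64
import hashlib
import hmac
import secrets
from typing import Optional

# Assumption: a real deployment loads a persistent key from secure configuration.
SECRET_KEY = secrets.token_bytes(32)

def _signature(value: str) -> str:
    mac = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(mac).decode().rstrip("=")

def sign(value: str) -> str:
    # Hypothetical cookie format: "<value>.<base64url HMAC-SHA256>"
    return f"{value}.{_signature(value)}"

def verify(cookie: str) -> Optional[str]:
    """Return the original value if the signature is valid, otherwise None."""
    value, sep, sig = cookie.rpartition(".")
    if not sep:
        return None
    # Constant-time comparison avoids leaking how much of the signature matched.
    if hmac.compare_digest(_signature(value).encode(), sig.encode()):
        return value
    return None
```

An attacker who edits `role=user` to `role=admin` can't produce a matching signature without the key, so `verify` rejects the cookie.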

Minimizing WAF Performance Impact

WAFs are typically deployed inline, meaning they sit directly in the path of traffic. It's therefore crucial that they are engineered, designed, and deployed properly so they don't add latency. Modern WAFs should be able to match or outpace the speed of the Layer 2-3 devices that feed them.

WAF High-Availability Architecture

High availability (HA) is a critical aspect of any WAF solution. The components within the appliance should be fault-tolerant from the outset. Once HA within the device itself is addressed, HA across devices is also required: WAF deployments should support multiple devices in a horizontally scaled cluster, both to provide HA and to accommodate the required network throughput.
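HA across devices can be sketched as a dispatcher that spreads traffic round-robin across a pool of WAF nodes and skips any node that has failed its health check. The node names and the `mark` method are hypothetical; in practice a load balancer or clustering protocol plays this role, driven by real health probes.

```python
from itertools import cycle

class WafPool:
    """Round-robin dispatch across WAF nodes, skipping unhealthy ones."""

    def __init__(self, nodes: list[str]):
        self.nodes = nodes
        self.healthy = {node: True for node in nodes}
        self._ring = cycle(nodes)

    def mark(self, node: str, is_healthy: bool) -> None:
        # In a real deployment, periodic health probes would update this.
        self.healthy[node] = is_healthy

    def next_node(self) -> str:
        # Try each node at most once per call so an all-down pool fails fast.
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if self.healthy[node]:
                return node
        raise RuntimeError("no healthy WAF nodes available")
```

Adding nodes to the list scales capacity horizontally, and losing one node only shifts its share of traffic to the rest.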

Emergent WAF Capabilities

As technology advances, attackers continue to exploit new capabilities to further their agendas. In response, WAF vendors are adding integrations with adjacent solutions and incorporating WAF technology into existing technology trends such as DevOps, Security Information and Event Management (SIEM), containerization, cloud, and artificial intelligence.

WAF Authentication Capabilities

Some WAF solutions let you add strong two-factor authentication in front of a website or application without integration, coding, or software changes. This can help protect administrative access, secure remote access to corporate web applications, and restrict access to particular web pages.
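Two-factor authentication is commonly built on time-based one-time passwords (TOTP, RFC 6238), which authenticator apps derive from a shared secret and the current time. The sketch below shows the check a 2FA gate has to perform; the function names, the 30-second step, and the one-step drift window are illustrative choices, not a particular vendor's implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    # RFC 4226: HMAC-SHA1 over the 8-byte big-endian counter, then dynamic truncation.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    # RFC 6238: the HOTP counter is the number of whole time steps since the Unix epoch.
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    # Accept codes from adjacent time steps to tolerate small clock drift.
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, c, digits).encode(), submitted.encode())
        for c in range(max(0, counter - window), counter + window + 1)
    )
```

With the RFC 6238 test secret `b"12345678901234567890"` and a time of 59 seconds, `totp(..., digits=8)` yields the specification's published value `94287082`.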

Detecting and Addressing WAF/IDS Evasion Techniques

When evaluating WAF technologies, it's important to test for core attack vector coverage and how well the solution addresses WAF evasion techniques. Some examples of WAF evasion techniques include multiparameter vectors, Unicode encoding, invalid characters, SQL comments, redundant whitespace, and various encoding techniques for XSS and Directory Traversal.
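Most of these evasions make the same payload look different on the wire, so a common countermeasure is to normalize input before matching: decode until the input stops changing, fold Unicode lookalikes, strip SQL comments, collapse whitespace, and lowercase. The sketch below is illustrative only; the chosen transformations and the single signature are assumptions, and production engines apply many more.

```python
import re
import unicodedata
from urllib.parse import unquote

SIGNATURE = re.compile(r"union select")  # hypothetical signature

def normalize(payload: str, max_rounds: int = 5) -> str:
    # Repeatedly URL-decode to undo double or triple encoding (bounded to avoid loops).
    for _ in range(max_rounds):
        decoded = unquote(payload)
        if decoded == payload:
            break
        payload = decoded
    # Fold Unicode compatibility forms (e.g. fullwidth letters) to their ASCII equivalents.
    payload = unicodedata.normalize("NFKC", payload)
    payload = re.sub(r"/\*.*?\*/", " ", payload, flags=re.DOTALL)  # strip SQL comments
    payload = re.sub(r"\s+", " ", payload)                           # collapse whitespace
    return payload.lower().strip()

def is_sqli(payload: str) -> bool:
    return bool(SIGNATURE.search(normalize(payload)))

print(is_sqli("1 UNION/**/SELECT pass"))    # True: comment used as a separator
print(is_sqli("1%2520UNION%2520SELECT"))    # True: double URL encoding
```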

Virtual Patching

Virtual patching is the rapid development and short-term deployment of a security policy intended to prevent an exploit from being successfully executed against a vulnerable target. It can help protect applications without modifying their source code, and virtual patches need to be installed only on the WAFs, not on every vulnerable device.
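A virtual patch is usually a narrowly scoped rule aimed at one known vulnerability. As a hypothetical example, suppose an application's `/products` endpoint is injectable through its `id` parameter and a code fix is weeks away; the WAF can require `id` to be strictly numeric on that path only, leaving the rest of the application untouched. The endpoint, parameter, and rule structure below are invented for illustration.

```python
import re
from typing import Callable

# Each virtual patch: (path it applies to, parameter name, validator).
VirtualPatch = tuple[str, str, Callable[[str], bool]]

VIRTUAL_PATCHES: list[VirtualPatch] = [
    # Hypothetical patch: /products is injectable via "id", so only digits are allowed.
    ("/products", "id", lambda v: re.fullmatch(r"\d{1,10}", v) is not None),
]

def allowed(path: str, params: dict[str, str]) -> bool:
    for patch_path, param, is_valid in VIRTUAL_PATCHES:
        if path == patch_path and param in params and not is_valid(params[param]):
            return False  # block before the request reaches the vulnerable code
    return True
```

Once the application itself is fixed, the rule can simply be removed from the list.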

WAFs are a crucial part of any cybersecurity strategy. They offer a robust defence against a wide range of threats and are continually evolving to keep up with emerging trends and technologies. However, they are not foolproof and should be deployed alongside other complementary technologies as part of a robust defence-in-depth strategy.

WAF Components

WAFs are an essential part of any cybersecurity strategy, but they don't work alone. They're part of a team that includes other technologies like API Gateways, Bot Management and Mitigation systems, Runtime Application Self-Protection (RASP), Content Delivery Networks (CDNs), Data Loss Prevention (DLP) solutions, and Data Masking and Redaction tools. Each of these technologies plays a unique role in securing your applications and data.

API Gateways

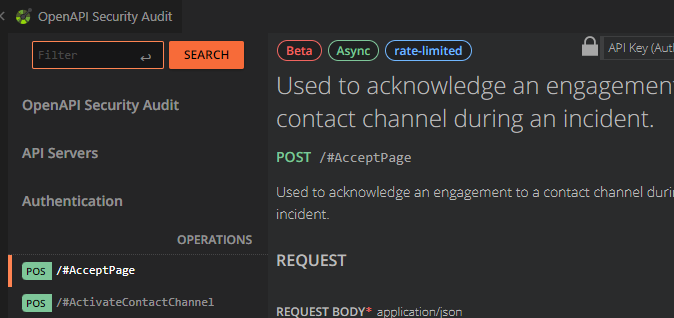

API Gateways are like the bouncers of your application. They control who gets in and who doesn't. They protect your internal APIs and allow them to be securely published to external consumers. They can also do protocol translation, meaning they can receive a REST request from the internet and translate that into a SOAP request for internal services.
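Protocol translation can be sketched as mapping a JSON REST payload onto a SOAP envelope. The operation name `GetOrder`, the service namespace, and the flat field mapping are hypothetical; a real gateway would typically derive the mapping from the service's WSDL and its configured transformation policies.

```python
import json
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SERVICE_NS = "urn:example:orders"                        # hypothetical service namespace
ET.register_namespace("soap", SOAP_ENV)
ET.register_namespace("ord", SERVICE_NS)

def rest_to_soap(operation: str, json_body: str) -> str:
    """Wrap a flat JSON object in a SOAP 1.1 envelope as children of <operation>."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    op = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    # Assumes JSON keys are valid XML element names.
    for key, value in json.loads(json_body).items():
        # xsd:boolean uses lowercase "true"/"false", unlike Python's str(True).
        text = str(value).lower() if isinstance(value, bool) else str(value)
        ET.SubElement(op, f"{{{SERVICE_NS}}}{key}").text = text
    return ET.tostring(envelope, encoding="unicode")

# e.g. a REST "GET /orders/42" handler might call:
print(rest_to_soap("GetOrder", '{"orderId": 42, "includeItems": true}'))
```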

Bot Management and Mitigation

Bots are like the little minions of the internet. Some are good, like search engine bots that index web pages. But some are bad, like bots that generate mass login attempts to verify the validity of stolen username and password pairs. WAFs can help deal with certain types of bots, but for more advanced bot threats, you might need a specialized bot mitigation and defence device.
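A basic control against bots that mass-test stolen credentials is to cap login attempts per client over a sliding time window. The sketch below keys on client IP address and uses hypothetical limits; a per-IP limit alone is easily sidestepped by bots that spread attempts across many addresses, which is part of why advanced bot threats call for specialized mitigation.

```python
import time
from collections import defaultdict, deque
from typing import Optional

class SlidingWindowLimiter:
    """Allow at most `limit` events per key within the last `window` seconds."""

    def __init__(self, limit: int = 5, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.events: dict[str, deque] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.events[key]
        # Drop timestamps that have slid out of the window.
        while q and q[0] <= now - self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: reject without recording the attempt
        q.append(now)
        return True
```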

Runtime Application Self-Protection (RASP)

RASP is like a bodyguard that's always with your application. It's embedded into an application's runtime and can respond to runtime attacks by replacing tampered code with original code, safely exiting or terminating an app after a runtime attack has been identified, or sending alerts to monitoring systems.

Content Delivery Networks (CDNs) and DDoS Attacks

CDNs are like the delivery trucks of the internet. They distribute cached content and access controls closer to the users that consume them. They can also help protect against DDoS attacks by absorbing the attacks and minimizing the performance impact on the actual web servers.

Data Loss Prevention (DLP)

DLP solutions are like the security cameras of your data. They ensure that sensitive data doesn't leak out of corporate boundaries. Modern DLP solutions expand beyond the perimeter and integrate with cloud providers and directly with user devices.
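At its simplest, DLP content inspection looks for patterns that resemble sensitive data. The sketch below flags likely payment card numbers in outbound text by combining a regular expression with the Luhn checksum, which filters out most random digit strings. The pattern and function names are illustrative; commercial DLP products use many more detectors and surrounding context.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits: str) -> bool:
    total = 0
    # Double every second digit from the right; subtract 9 when the result exceeds 9.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_card_numbers(text: str) -> list[str]:
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits
```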

Data Masking and Redaction

Data Masking and Redaction tools are like the blurring effect on a video. They conceal data or redact it so that only those who have a need to know can see the full dataset.
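A common masking rule for payment card numbers is to reveal only the last four digits. The helpers below are a hypothetical example of such a rule combined with a simple need-to-know check; the role name and function names are assumptions for illustration.

```python
def mask_card_number(number: str, visible: int = 4, mask_char: str = "*") -> str:
    """Replace all but the last `visible` digits with `mask_char`, keeping separators."""
    total_digits = sum(ch.isdigit() for ch in number)
    digits_seen = 0
    out = []
    for ch in number:
        if ch.isdigit():
            digits_seen += 1
            out.append(ch if digits_seen > total_digits - visible else mask_char)
        else:
            out.append(ch)  # keep spaces and hyphens so the format stays readable
    return "".join(out)

def view(number: str, role: str) -> str:
    # Hypothetical need-to-know check: only the "billing" role sees the full number.
    return number if role == "billing" else mask_card_number(number)

print(view("4111 1111 1111 1111", "support"))  # **** **** **** 1111
```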

WAF Deployment Models

WAFs can be deployed in various ways, including on-premises, native cloud, cloud-virtual, inline reverse proxy, transparent proxy/network bridge, out-of-band, multitenancy, single tenancy, software appliance-based, and hybrid. The choice of deployment model depends on your specific needs and environment.

Designing a Comprehensive Network Security Solution

When designing a comprehensive network security solution, it's important to consider all the components and how they work together. This includes WAFs, API Gateways, Bot Management and Mitigation systems, RASP, CDNs, DLP solutions, and Data Masking and Redaction tools. Each of these technologies plays a unique role in securing your applications and data.

Summary

Web Application Firewalls are an essential part of any cybersecurity strategy, but they don't work alone. They're part of a team of technologies that together provide a robust defence-in-depth strategy. So, when you're planning your cybersecurity strategy, make sure to consider all these components and how they can work together to secure your applications and data.