Demystifying Language AI

Andrew Butson

Andrew Butson - 07 Jan, 2024

Have you ever wondered how your smartphone’s voice assistant understands your commands or how translation apps convert text between languages with such accuracy? The magic behind these technologies lies in Language AI, a fascinating field that’s evolved dramatically over the past few decades.

In this blog post, we’ll embark on a journey through the evolution of Language AI, exploring how we’ve moved from simple text representations to the sophisticated large language models (LLMs) that power today’s cutting-edge applications. Let’s dive in!

What Is Language AI?

Before we delve into the history, let’s clarify what we mean by Language AI. At its core, Language AI is a subfield of artificial intelligence that focuses on enabling machines to understand, process, and generate human language. It’s often used interchangeably with natural language processing (NLP).

As defined by one of the AI pioneers, John McCarthy:

“[Artificial intelligence is] the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.” — John McCarthy, 2007

Language AI encompasses a wide range of technologies, from simple keyword spotting to complex language generation. While the term large language model often refers to models with vast numbers of parameters, in this context, we’ll be exploring models that have significantly impacted the field, regardless of their size.

A Recent History of Language AI

The Challenge of Representing Language

Language is inherently complex and unstructured. Unlike numerical data, text can’t be fed directly into the mathematical operations that machine learning models perform. Early in the development of Language AI, a major focus was finding ways to represent text in a structured, numerical format that computers could work with.

Bag-of-Words: The Starting Point

Our journey begins with the bag-of-words (BoW) model—a simple method for representing text that treats a document as an unordered collection of words.

How Does It Work?

Imagine you have two sentences:

- Sentence 1: “Dogs bark at strangers.”

- Sentence 2: “Cats meow at people.”

Step 1: Tokenization

First, we lowercase each sentence, drop punctuation, and split it into individual words (tokens):

- Sentence 1: [“dogs”, “bark”, “at”, “strangers”]

- Sentence 2: [“cats”, “meow”, “at”, “people”]

Step 2: Vocabulary Creation

Next, we compile a list of all unique words from both sentences to create a vocabulary:

- Vocabulary: [“at”, “bark”, “cats”, “dogs”, “meow”, “people”, “strangers”]

Step 3: Vector Representation

We then create vectors for each sentence, counting the occurrence of each vocabulary word:

- Sentence 1 Vector: [1, 1, 0, 1, 0, 0, 1]

- Sentence 2 Vector: [1, 0, 1, 0, 1, 1, 0]

Limitations

While BoW is straightforward, it ignores word order and context, treating “Dogs bark at strangers” the same as “Strangers bark at dogs,” which clearly have different meanings.
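The three steps above fit in a few lines of Python. This is a minimal sketch, not a production tokenizer. The regular expression `[a-z]+` is an assumption: it keeps only runs of lowercase letters, so it would split a word like “don’t” into two tokens. The last line demonstrates the limitation just described, because both word orders produce identical vectors.

```python
import re
from collections import Counter

def tokenize(text):
    """Step 1: lowercase the text and keep runs of letters as tokens (punctuation is dropped)."""
    return re.findall(r"[a-z]+", text.lower())

def build_vocabulary(documents):
    """Step 2: sorted list of every unique token across all documents."""
    return sorted({token for doc in documents for token in tokenize(doc)})

def bag_of_words(text, vocabulary):
    """Step 3: count how often each vocabulary word occurs in the text."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]

sentences = ["Dogs bark at strangers.", "Cats meow at people."]
vocab = build_vocabulary(sentences)
print(vocab)  # ['at', 'bark', 'cats', 'dogs', 'meow', 'people', 'strangers']
for s in sentences:
    print(bag_of_words(s, vocab))
# [1, 1, 0, 1, 0, 0, 1]
# [1, 0, 1, 0, 1, 1, 0]

# Word order is lost: swapping subject and object gives the same vector.
print(bag_of_words("Dogs bark at strangers.", vocab) == bag_of_words("Strangers bark at dogs.", vocab))  # True
```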

Introducing Dense Vector Embeddings

To capture the meaning and context of words, researchers developed methods to represent words in continuous vector spaces—word embeddings.

Word2Vec: A Breakthrough

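In 2013, researchers at Google (Mikolov et al.) introduced Word2Vec. It trains a shallow neural network to predict a word from its neighbors, or the neighbors from a word, and learns a vector for every word in a large text corpus as a by-product.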

Key Concepts

- Continuous Representation: Words are represented as dense vectors in a high-dimensional space.

- Semantic Similarity: Words with similar meanings are positioned closer together.

Example

Consider words like “king,” “queen,” “man,” and “woman.” In the embedding space, relationships can be captured such that:

- Vector(“king”) - Vector(“man”) + Vector(“woman”) ≈ Vector(“queen”)
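The sketch below makes that arithmetic concrete using hand-made vectors. The three-dimensional values in `toy_embeddings` are hypothetical and were chosen so that the analogy works out. Real Word2Vec vectors are learned from data and have far more dimensions. Closeness is measured with cosine similarity, a common choice for comparing embeddings, and the three query words are excluded from the search.

```python
import math

# Hypothetical, hand-made 3-dimensional vectors for illustration only;
# real Word2Vec embeddings are learned and much larger.
toy_embeddings = {
    "king":  [1.0, 1.0, 0.2],
    "queen": [1.0, -1.0, 0.2],
    "man":   [0.0, 1.0, 0.1],
    "woman": [0.0, -1.0, 0.1],
    "apple": [-0.5, 0.0, 1.0],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, embeddings):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = {w: v for w, v in embeddings.items() if w not in (a, b, c)}
    return max(candidates, key=lambda w: cosine_similarity(target, candidates[w]))

print(analogy("king", "man", "woman", toy_embeddings))  # queen
```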

Understanding Embeddings

Embeddings aren’t limited to words. We can create embeddings for sentences, paragraphs, or even entire documents, capturing more nuanced meanings.
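If you already have word vectors, one simple way to get a sentence embedding is to average them. The sketch below does this with hypothetical toy vectors. Averaging discards word order, just as bag-of-words does. Dedicated sentence-embedding models are trained specifically to do better.

```python
def mean_pool(vectors):
    """Average equal-length vectors component by component."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def sentence_embedding(sentence, word_vectors):
    """Average the vectors of the known words in a sentence; unknown words are skipped."""
    tokens = [t for t in sentence.lower().split() if t in word_vectors]
    if not tokens:
        raise ValueError("no known words in sentence")
    return mean_pool([word_vectors[t] for t in tokens])

# Hypothetical 3-dimensional word vectors, for illustration only.
toy_word_vectors = {
    "dogs": [0.8, 0.1, 0.0],
    "bark": [0.6, 0.3, 0.1],
    "loudly": [0.0, 0.2, 0.9],
}
print(sentence_embedding("Dogs bark loudly", toy_word_vectors))
```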

Context Matters: Recurrent Neural Networks

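Embeddings improved word representations, but models like Word2Vec give each word a single, static vector regardless of the sentence it appears in. As a result, “bank” gets the same vector in “river bank” and in “bank loan.” To model words in context, researchers turned to Recurrent Neural Networks (RNNs), which read a sentence one word at a time.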

How RNNs Work

RNNs process sequences by maintaining a hidden state that captures information about previous elements. This makes them suitable for tasks like language modeling and translation.
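A vanilla RNN step can be written as h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The pure-Python sketch below runs that update over a sequence. The weight names, the one-hot inputs, and the random untrained weights are illustrative assumptions. Because each state depends on the previous one, feeding the same words in a different order produces a different final hidden state, which a bag-of-words vector cannot do.

```python
import math
import random

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence of input vectors and return the final hidden state.

    Update at each step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
    """
    hidden = [0.0] * len(b_h)  # h_0 is all zeros
    for x in inputs:
        pre_activation = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, hidden), b_h)]
        hidden = [math.tanh(v) for v in pre_activation]
    return hidden

# Hypothetical setup: one-hot word inputs and random, untrained weights.
vocab = ["dogs", "bark", "at", "strangers"]
one_hot = {word: [1.0 if i == j else 0.0 for j in range(len(vocab))] for i, word in enumerate(vocab)}

rng = random.Random(0)
hidden_size = 3
W_xh = [[rng.uniform(-1, 1) for _ in vocab] for _ in range(hidden_size)]
W_hh = [[rng.uniform(-1, 1) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

h1 = rnn_forward([one_hot[w] for w in ["dogs", "bark", "at", "strangers"]], W_xh, W_hh, b_h)
h2 = rnn_forward([one_hot[w] for w in ["strangers", "bark", "at", "dogs"]], W_xh, W_hh, b_h)
print(h1)
print(h2)  # a different state: same words, different order
```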

Example: Sentiment Analysis

Consider the sentences:

- “I am not happy with the service.”

- “I am not unhappy with the service.”

An RNN reads words in order. A model trained on labeled examples can therefore learn how “not” modifies the word that follows it and assign these two sentences different sentiments.

Challenges

RNNs struggle with long-term dependencies due to issues like vanishing gradients, making it difficult to capture context over longer sequences.
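A short calculation shows why gradients vanish. Assume the vanilla RNN update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). Backpropagating from step T back to step 1 multiplies one Jacobian per step:

$$
\frac{\partial h_T}{\partial h_1} = \frac{\partial h_T}{\partial h_{T-1}} \, \frac{\partial h_{T-1}}{\partial h_{T-2}} \cdots \frac{\partial h_2}{\partial h_1},
\qquad
\frac{\partial h_t}{\partial h_{t-1}} = \operatorname{diag}\!\left(1 - h_t^{2}\right) W_{hh},
\qquad
\left\lVert \frac{\partial h_T}{\partial h_1} \right\rVert \le \gamma^{\,T-1}
$$

Here γ is any bound on the per-step Jacobian norm. Because tanh′ ≤ 1, the norm of W_hh serves as such a bound. With γ = 0.9, the bound across 100 steps is 0.9^100 ≈ 2.7 × 10⁻⁵, so the learning signal from distant words can all but disappear.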

Attention Mechanisms: Focusing on What’s Important

To ease these limitations, researchers introduced the attention mechanism, initially as an addition to RNN-based translation models. Attention lets a model focus on the most relevant parts of the input as it generates each part of the output.

How Attention Works

In machine translation, for example, attention helps the model align words in the source language with words in the target language, improving translation accuracy.

Example

Translating “The weather today is beautiful” into French (“Le temps est beau aujourd’hui”):

- The model can align “weather” with “temps” and “beautiful” with “beau.” This helps it produce the correct translation even though the French word order differs.
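Below is a minimal sketch of attention for a single decoding step. It uses the scaled dot-product scoring that the Transformer later adopted. The earliest attention-based translation models instead scored alignments with a small feed-forward network, known as additive attention. All vectors here are hypothetical and were picked by hand so that the query for “temps” lines up with “weather.”

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(query, keys, values):
    """Single-query attention: weights = softmax(q . k / sqrt(d)); output = weighted sum of values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[i] for w, value in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

# Hypothetical 2-D vectors for the English source words (used as both keys and values).
source = ["The", "weather", "today", "is", "beautiful"]
keys = [[0.1, 0.0], [2.0, 0.0], [0.0, 0.5], [0.1, 0.1], [0.0, 2.0]]

# Hypothetical decoder query while producing the French word "temps".
weights, _ = scaled_dot_product_attention([2.0, 0.0], keys, keys)
for word, w in zip(source, weights):
    print(f"{word:>10}: {w:.2f}")  # "weather" receives the largest weight
```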

Transformers: Revolutionizing Language AI

In 2017, the Transformer architecture took the field by storm. By relying entirely on attention mechanisms and discarding recurrence, Transformers enabled parallel processing and improved handling of long-range dependencies.

Key Components

- Self-Attention: The model considers all words in the input when processing each word, capturing relationships regardless of distance.

- Positional Encoding: Since Transformers don’t process inputs sequentially, positional encoding is added to provide information about the position of words.
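The original Transformer paper used fixed sinusoidal positional encodings, which are added element-wise to the token embeddings. Many later models, including BERT and GPT-2, learn their position embeddings instead. Here is a pure-Python sketch of the sinusoidal version; `add_positions` is an illustrative helper name.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Sinusoidal encodings from the original Transformer paper.

    PE[pos][2i]   = sin(pos / 10000**(2i / d_model))
    PE[pos][2i+1] = cos(pos / 10000**(2i / d_model))
    """
    encoding = []
    for pos in range(num_positions):
        row = []
        for dim in range(d_model):
            angle = pos / (10000 ** ((dim - dim % 2) / d_model))  # dim - dim % 2 == 2i
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        encoding.append(row)
    return encoding

def add_positions(token_embeddings):
    """Inject position information by adding the encoding to each token embedding."""
    pe = sinusoidal_positional_encoding(len(token_embeddings), len(token_embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(token_embeddings, pe)]

pe = sinusoidal_positional_encoding(num_positions=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
```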

Impact

Transformers have become the backbone of many state-of-the-art models, dramatically improving performance on a range of tasks.

BERT: Deep Bidirectional Understanding

Building on Transformers, BERT (Bidirectional Encoder Representations from Transformers) was introduced in 2018. BERT is designed to understand the context of a word based on all of its surroundings (left and right context).

Key Features

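- Masked Language Modeling: During training, 15% of the input tokens are selected (most of them replaced with a [MASK] token), and the model learns to predict the originals. This forces it to use context on both sides.

- Next Sentence Prediction: The model also learns to predict whether a second sentence actually follows the first, which helps it capture relationships between sentences.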
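In the BERT paper’s masking recipe, each selected token becomes [MASK] 80% of the time, a random token 10% of the time, and stays unchanged 10% of the time. The loss is computed only at the selected positions. The sketch below is a simplification. It selects each word independently with probability `mask_prob`, whereas implementations differ in exactly how positions are chosen, and it works on whole words rather than BERT’s subword tokens. `mask_tokens` is a hypothetical helper name.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, vocabulary, mask_prob=0.15, rng=None):
    """BERT-style masking sketch.

    Returns (corrupted, labels). labels holds the original token at selected
    positions (where the loss would be computed) and None everywhere else.
    """
    rng = rng or random.Random()
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:  # select this position for prediction
            labels.append(token)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK_TOKEN)                # 80%: mask it
            elif roll < 0.9:
                corrupted.append(rng.choice(vocabulary))    # 10%: random token
            else:
                corrupted.append(token)                     # 10%: keep as-is
        else:
            labels.append(None)
            corrupted.append(token)
    return corrupted, labels

sentence = "the bank will not approve the loan".split()
corrupted, labels = mask_tokens(sentence, vocabulary=sentence, rng=random.Random(42))
print(corrupted)
print(labels)
```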

Applications

BERT excels at tasks like question answering, sentiment analysis, and named entity recognition.

Example

For the sentence “The bank will not approve the loan,” BERT can understand that “bank” refers to a financial institution, not a riverbank, based on context.

GPT and the Era of Large Language Models

On the generative side, the GPT (Generative Pre-trained Transformer) series has pushed the boundaries of what’s possible with language generation.

GPT Highlights

- Unidirectional: GPT models read text left-to-right, focusing on generating the next word in a sequence.

- Transformer Decoder Architecture: Uses Transformer decoders with masked self-attention to prevent the model from seeing future tokens.

- Scaling Up: GPT-3 (2020) has 175 billion parameters. At that scale it can perform many tasks without task-specific fine-tuning.
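The masking that keeps a decoder from “seeing the future” amounts to setting every score for a later position to negative infinity before the softmax. The pure-Python sketch below uses hypothetical, uniform scores so that the effect is easy to read. Real implementations apply the same idea to whole tensors at once.

```python
import math

def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def masked_softmax(scores, allowed):
    """Softmax over one row of scores, giving zero weight to disallowed (future) positions."""
    masked = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]

mask = causal_mask(4)
scores = [[1.0] * 4 for _ in range(4)]  # hypothetical: every pair scores equally
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]
for row in weights:
    print([round(w, 2) for w in row])
# [1.0, 0.0, 0.0, 0.0]
# [0.5, 0.5, 0.0, 0.0]
# [0.33, 0.33, 0.33, 0.0]
# [0.25, 0.25, 0.25, 0.25]
```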

Innovations

- Few-Shot Learning: GPT-3 can perform a task after seeing only a handful of examples in the prompt, with no updates to its weights.

- Zero-Shot Learning: It can attempt tasks given only a natural-language instruction, with no examples at all.
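In practice, few-shot and zero-shot prompting come down to text formatting: the examples go into the prompt, and no weights change. The helper below is a hypothetical sketch, and its “Input:/Output:” layout is just one arbitrary convention, not a required format. Passing an empty example list produces a zero-shot prompt.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a plain-text prompt: instruction, worked examples, then the new input to complete."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)

# Hypothetical sentiment task with two examples (few-shot).
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The food was delicious.", "positive"), ("The service was slow and rude.", "negative")],
    "I would happily come back.",
)
print(prompt)
```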

Example

Ask GPT-3 to “Write a poem about the ocean,” and it can generate a coherent and creative piece without being fine-tuned specifically for poetry.

The Rise of Generative AI: 2023 and Beyond

The release of ChatGPT in November 2022 and GPT-4 in March 2023 marked a significant milestone in making AI accessible to the public.

ChatGPT

- Conversational AI: Designed for interactive conversations, understanding context, and maintaining dialogue.

- Instruction Following: Fine-tuned on human-written demonstrations and then with reinforcement learning from human feedback (RLHF) to follow user instructions.

Impact

These models have found applications in customer service, content creation, education, and more, democratizing AI capabilities.

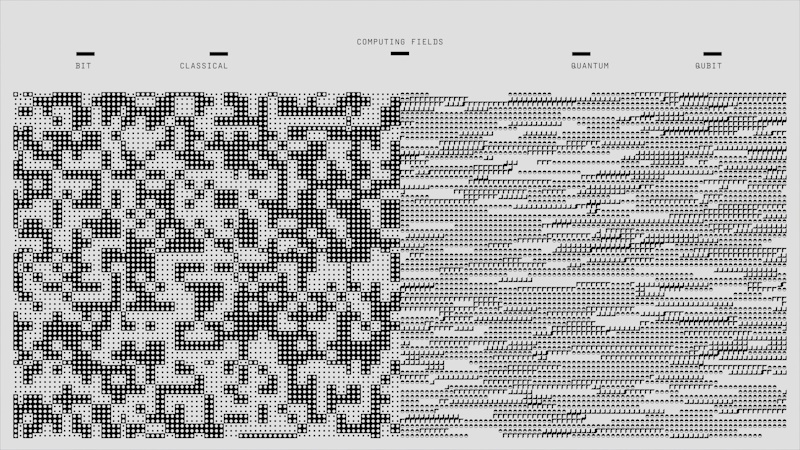

Understanding “Large” in Large Language Models

The term Large Language Model can be ambiguous. It’s not just about the number of parameters but also about the model’s capacity to generalize and perform various tasks.

Considerations

- Size Isn’t Everything: A smaller model trained on more or better data can outperform a larger, undertrained one.

- Accessibility: Models that run efficiently on consumer hardware contribute to broader adoption.
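A quick calculation shows why parameter count matters for accessibility. The weights alone take roughly (number of parameters) × (bytes per parameter): 4 bytes at 32-bit precision, 2 at 16-bit, and 1 with 8-bit quantization. The sketch ignores activations, caches, and framework overhead, so actual memory use is higher. GPT-2 small has about 124 million parameters and GPT-3 has 175 billion.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_parameters * bytes_per_parameter / 1e9

for name, params in [("GPT-2 small", 124e6), ("GPT-3", 175e9)]:
    for precision, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
        print(f"{name} {precision}: {weight_memory_gb(params, nbytes):.1f} GB")
```

At 16-bit precision, GPT-3’s weights alone need about 350 GB, far more than any consumer GPU holds. GPT-2 small fits in about 0.5 GB even at 32-bit precision.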

Open vs. Proprietary Models

- Open Models: Accessible to the public, allowing for transparency and collaboration. Examples include models from EleutherAI and Hugging Face.

- Proprietary Models: Developed by companies like OpenAI, offering APIs for access but not releasing model weights.

The Two-Step Training Paradigm

Training LLMs typically involves two main steps:

1. Pretraining: Learning Language Representations

- Objective: Teach the model general language understanding by predicting missing words or the next word in large text corpora.

- Data: Massive datasets from books, websites, and articles.
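Next-word prediction is self-supervised: the labels come from the text itself, by pairing each token with the one that follows it. The sketch below shows this pairing and the usual cross-entropy objective. To keep the example dependency-free, it uses a simple bigram count model as a stand-in for a neural network. That is an assumption for illustration only: real LLMs condition on the whole preceding context, not just one token.

```python
import math
from collections import Counter, defaultdict

def next_token_pairs(tokens):
    """Self-supervised (context, target) pairs: each token's label is the token after it."""
    return list(zip(tokens[:-1], tokens[1:]))

def train_bigram_model(tokens):
    """Count-based stand-in for a neural language model: estimates P(next | previous token)."""
    counts = defaultdict(Counter)
    for prev, nxt in next_token_pairs(tokens):
        counts[prev][nxt] += 1
    return counts

def next_token_loss(model, tokens):
    """Average cross-entropy (negative log-likelihood) of each next token.

    No smoothing: an unseen (previous, next) pair would raise an error here.
    """
    pairs = next_token_pairs(tokens)
    total = 0.0
    for prev, nxt in pairs:
        probability = model[prev][nxt] / sum(model[prev].values())
        total -= math.log(probability)
    return total / len(pairs)

corpus = "the cat sat on the mat".split()
bigram_model = train_bigram_model(corpus)
print(next_token_pairs(corpus))
print(next_token_loss(bigram_model, corpus))
```

In this six-word corpus, “the” is followed once by “cat” and once by “mat,” so each of those two predictions has probability 0.5. The other predictions are certain, which gives an average loss of 2·ln 2 / 5 ≈ 0.277.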

2. Fine-Tuning: Specializing for Tasks

- Objective: Adapt the pretrained model to specific tasks like translation, summarization, or question answering.

- Methods:

- Supervised Fine-Tuning: Training on labeled datasets for the task.

- Reinforcement Learning from Human Feedback (RLHF): Using human preferences to shape model outputs (used in ChatGPT).
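The RLHF recipe described for InstructGPT has three stages. First, human labelers compare pairs of model responses. Next, a reward model is trained to score the preferred response higher. Finally, the language model is optimized against that reward (with PPO in that work). The sketch below covers only the reward model’s pairwise loss, -log σ(r_chosen - r_rejected), and the reward values passed in are hypothetical.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way.

    The loss is small when the reward model scores the human-preferred response higher.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch on the sign so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

print(pairwise_preference_loss(2.0, 0.5))  # preferred answer scored higher: small loss
print(pairwise_preference_loss(0.5, 2.0))  # preferred answer scored lower: large loss
```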

Example

Fine-tuning a pretrained model on medical records to assist doctors with patient summaries while maintaining privacy and compliance.

Real-World Applications of Language AI

1. Sentiment Analysis

- Use Case: Analyzing customer reviews to gauge satisfaction.

- Approach: Using models like BERT to classify text as positive, negative, or neutral.

2. Content Generation

- Use Case: Automating report writing or generating news articles.

- Approach: Leveraging GPT models to produce coherent and contextually relevant text.

3. Language Translation

- Use Case: Real-time translation services.

- Approach: Using transformer-based models for accurate translations that consider context and idioms.

4. Chatbots and Virtual Assistants

- Use Case: Customer support, scheduling, and personal assistants.

- Approach: Training conversational models to understand and respond to user queries effectively.

5. Code Generation

- Use Case: Assisting developers by generating code snippets from comments or descriptions.

- Approach: Specialized models trained on programming languages.

Ethical Considerations and Responsible AI

As Language AI becomes more integrated into society, it’s crucial to address ethical challenges:

Bias and Fairness

- Issue: Models can learn and amplify biases present in training data.

- Solution: Implementing bias detection and mitigation strategies, diversifying training data.

Transparency and Accountability

- Issue: Users may be unaware they’re interacting with AI.

- Solution: Clearly disclosing AI involvement, ensuring explainability.

Misinformation and Harmful Content

- Issue: Potential for generating misleading or harmful information.

- Solution: Content filtering, establishing guidelines for safe deployments.

Privacy and Data Security

- Issue: Handling sensitive information responsibly.

- Solution: Anonymizing data, complying with regulations like GDPR.

Getting Hands-On: Generate Your First Text with an LLM

Ready to see Language AI in action? Let’s generate text using an open-source model.

We’ll use the GPT-2 model for this example.

Setup

First, install the necessary libraries:

```bash
pip install transformers torch
```

Load the Model and Tokenizer

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "gpt2"  # Using GPT-2 small model for simplicity

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)
```

Generate Text

Define a prompt and generate text:

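```python
prompt = "In a world where technology knows no bounds,"

# Tokenize the prompt; this returns both input_ids and an attention_mask
inputs = tokenizer(prompt, return_tensors="pt")

# Generate a text continuation (greedy decoding)
output = model.generate(
    **inputs,
    max_length=50,                        # total length in tokens, prompt included
    num_return_sequences=1,
    no_repeat_ngram_size=2,               # never repeat any 2-token sequence
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token; reuse end-of-text
)

# Decode and print the result
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(generated_text)
```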

Sample Output:

In a world where technology knows no bounds, the lines between reality and virtuality have blurred. People are connected to each other through a vast network of digital interfaces, sharing experiences and emotions in ways never before imagined.
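The `no_repeat_ngram_size=2` argument in the generation call stops the model from producing any two-token sequence twice. The sketch below is a simplified, hypothetical illustration of that rule combined with a greedy choice. It works on words for readability, whereas the library operates on token IDs and logits, and it is not the library’s own code.

```python
def banned_next_tokens(tokens, n):
    """Tokens that would recreate an n-gram already present in `tokens`."""
    if n < 1 or len(tokens) < n - 1:
        return set()
    prefix = tuple(tokens[len(tokens) - (n - 1):])  # the last n-1 tokens
    banned = set()
    for i in range(len(tokens) - n + 1):
        if tuple(tokens[i:i + n - 1]) == prefix:
            banned.add(tokens[i + n - 1])  # this continuation was already used after the same prefix
    return banned

def greedy_step(scores, tokens, n):
    """Pick the highest-scoring next token that does not repeat an n-gram.

    Raises ValueError if every candidate is banned.
    """
    banned = banned_next_tokens(tokens, n)
    allowed = {token: score for token, score in scores.items() if token not in banned}
    return max(allowed, key=allowed.get)

# Hypothetical word-level history and next-word scores.
history = ["the", "cat", "sat", "on", "the"]
scores = {"cat": 0.9, "mat": 0.6, "dog": 0.3}
print(banned_next_tokens(history, 2))  # {'cat'}
print(greedy_step(scores, history, 2))  # mat
```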

Looking Ahead: The Future of Language AI

The rapid advancements in Language AI point to an exciting future:

- Multimodal Models: Integrating text, images, and audio for richer interactions.

- Personalization: Tailoring models to individual preferences while respecting privacy.

- Improved Efficiency: Developing models that deliver high performance with lower resource demands.

From the simplicity of bag-of-words models to the sophistication of large language models, Language AI has transformed how we interact with technology. These advancements have unlocked new possibilities in communication, automation, and problem-solving.

However, with great power comes great responsibility. As practitioners and users of AI, we must ensure that we’re fostering inclusive, ethical, and transparent practices.

Thank you for joining me on this journey through the evolution of Language AI. The field continues to evolve rapidly, and I can’t wait to see where it takes us next!